Coding with OpenAI's New o1 Model and Cursor

My new process, which uses a variety of LLMs depending on the situation

Listen, I’m no hype guy, but OpenAI’s o1 is very exciting. It’s no panacea for the limitations of LLMs, but it is great in some very specific use cases. So far, AI-assisted coding is an area where o1 makes a BIG difference for me.

After a weekend coding with the new o1 model, here’s my new AI-assisted coding process.

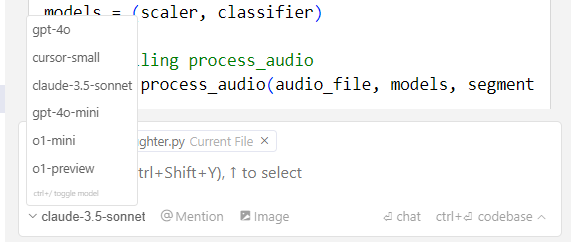

I use Cursor because it makes switching between LLMs easy. I pay for Cursor Pro.

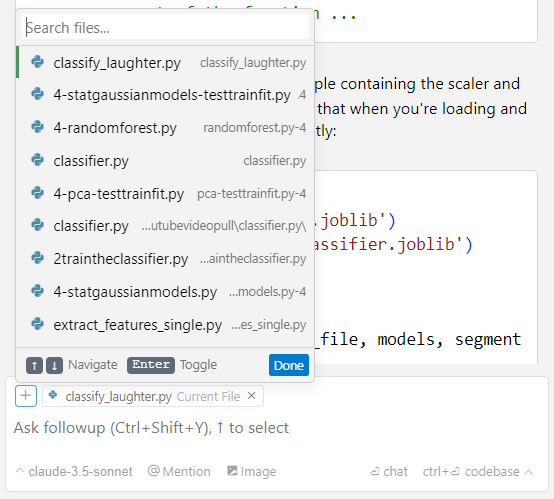

Before you ask a question or debug, always make sure your relevant files are open. If you’re worried the LLM is missing context, explicitly include the relevant files in each chat; Cursor tends to drop all but the current file.

You can tell the LLM hasn’t looked at the relevant code when it comes back with “if x is in your file” or when there’s no “Apply” button in the upper right-hand corner.

My Latest AI-Assisted Coding Process

I start with Claude 3.5 Sonnet or GPT-4o. If they can’t produce working code within 2-3 rounds of debugging, I move to the next step. (I do want to go back and try cursor-mini again, since fast requests on it are unlimited.)

Next I try Perplexity, sometimes giving it the URL of an API doc or similar. Didn’t work? Next step.

Then I switch to o1-mini, because you get 10 free queries a day and beyond that it’s 10 cents a query (much cheaper than o1-preview). If that doesn’t work, next step.

Finally, I switch to o1-preview as a last resort, because it costs 40 cents a query! Is it worth it? You decide. When Claude and GPT are stuck and mucking things up, it certainly can be.
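To put the pricing in perspective, here’s a rough Python sketch of the escalation ladder and the extra daily cost of the o1 steps. The function and constant names are my own, the prices are the per-query figures quoted above (they will change), and usage of Claude, GPT-4o, and Perplexity is left out of the cost.

```python
from typing import Optional

# The escalation ladder described above, cheapest and fastest first.
LADDER = ["Claude 3.5 Sonnet or GPT-4o", "Perplexity", "o1-mini", "o1-preview"]

# Per-query prices as quoted in this post, in US cents; they will change.
O1_MINI_FREE_PER_DAY = 10
O1_MINI_CENTS = 10
O1_PREVIEW_CENTS = 40


def next_step(current: str) -> Optional[str]:
    """Return the next model to try, or None once o1-preview has failed too."""
    i = LADDER.index(current)
    return LADDER[i + 1] if i + 1 < len(LADDER) else None


def daily_o1_cost_cents(mini_queries: int, preview_queries: int) -> int:
    """Extra spend for one day: the first 10 o1-mini queries are free."""
    billable_mini = max(0, mini_queries - O1_MINI_FREE_PER_DAY)
    return billable_mini * O1_MINI_CENTS + preview_queries * O1_PREVIEW_CENTS


print(next_step("Perplexity"))     # o1-mini
print(daily_o1_cost_cents(15, 2))  # 130
```

For example, 15 o1-mini queries and 2 o1-preview queries in one day comes to 5 × 10 + 2 × 40 = 130 cents, versus 17 × 40 = 680 cents if all 17 had gone straight to o1-preview.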

Bonus Tip: When Claude and GPT don’t have the latest documentation

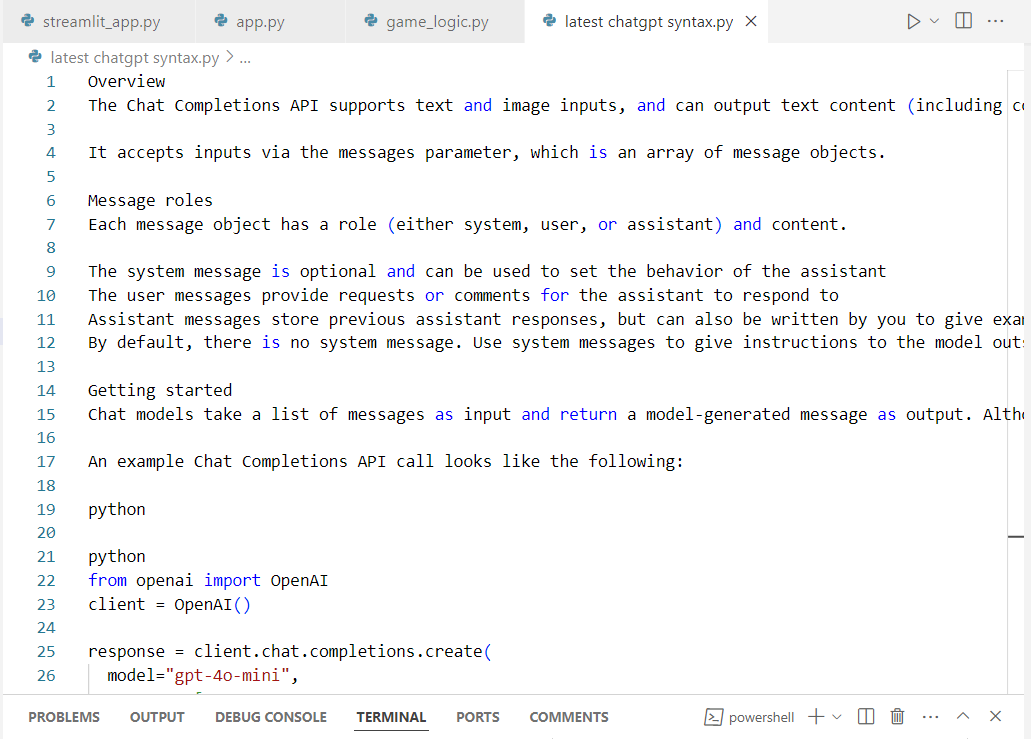

It’s maddening that the LLMs don’t know the latest syntax, functions, and endpoints. For example, OpenAI changed their chat completions endpoint, and if you don’t watch out, Claude and GPT-4o will replace the new, working call with what they think is the latest and call the new one outdated. The nerve!

My solution is to copy and paste the relevant webpage text into a new text file in Cursor, then add that file to the chat’s context when querying.
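If you’d rather save a docs page as HTML than copy the rendered text by hand, a small script can strip it down to plain text for that reference file. This is a minimal sketch using only Python’s standard-library `html.parser`; the function name, the optional output path, and the idea of saving the HTML first are my assumptions, not part of the process above.

```python
from html.parser import HTMLParser
from pathlib import Path
from typing import Optional


class _VisibleTextParser(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def html_to_reference_text(html: str, out_path: Optional[Path] = None) -> str:
    """Reduce a saved docs page to plain text, one text node per line.

    If out_path is given (a hypothetical file such as docs/api_notes.txt),
    the text is also written there so it can be added to the Cursor chat.
    """
    parser = _VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = "\n".join(parser.chunks)
    if out_path is not None:
        out_path.write_text(text + "\n", encoding="utf-8")
    return text
```

This is deliberately crude: it keeps navigation menus and footers along with the docs, so a quick manual trim of the output file is still worthwhile.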

The LLM may grudgingly throw shade (“if xxxx really is the latest endpoint…”), but it’ll do it. Gee, thanks!

Always Be Saving

The previous section also teaches a hard lesson: when the LLM suggests code changes, read through ALL of them before accepting, and back up working versions of your code before making changes. That might mean very specific versioning, e.g. v0.06, v0.07, and so on. If you integrate with GitHub, you may not need to worry about this as much.
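If you’re not using Git, that manual versioning can be automated a little. Here’s a minimal Python sketch, assuming you keep numbered copies in a separate backups folder; the function name, folder layout, and `_v0.NN` naming scheme are hypothetical, chosen to mirror the v0.06, v0.07 example.

```python
import re
import shutil
import tempfile
from pathlib import Path


def next_version_backup(src: Path, backup_dir: Path) -> Path:
    """Copy src into backup_dir as <stem>_v0.NN<suffix>, one number higher
    than the highest existing backup of that file (hypothetical scheme)."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Matches e.g. app_v0.07.py when src is app.py
    pattern = re.compile(
        rf"^{re.escape(src.stem)}_v0\.(\d+){re.escape(src.suffix)}$"
    )
    existing = [
        int(m.group(1))
        for p in backup_dir.iterdir()
        if (m := pattern.match(p.name))
    ]
    next_num = max(existing, default=0) + 1
    dest = backup_dir / f"{src.stem}_v0.{next_num:02d}{src.suffix}"
    shutil.copy2(src, dest)  # copy2 also preserves file timestamps
    return dest


if __name__ == "__main__":
    # Demo in a throwaway directory so nothing real gets touched.
    workdir = Path(tempfile.mkdtemp())
    script = workdir / "app.py"
    script.write_text("print('working version')\n")
    print(next_version_backup(script, workdir / "backups").name)  # app_v0.01.py
    print(next_version_backup(script, workdir / "backups").name)  # app_v0.02.py
```

Run it right before accepting a big batch of LLM edits. With Git, a commit of the working state does the same job with far less ceremony.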

Now What?

If you have a different process, I’d love to hear it!

For me, Cursor plus all these LLMs is a great, very productive combo. Try them out!